Load Balancing ✨

Ever wondered how you can browse the Internet with so little delay when loading webpages?🤔 Most users of the web are blissfully unaware of the sheer scale of the process responsible for bringing content across the Internet. There are literally miles of distance between you and the server you’re accessing. So how do you ensure that your site won’t burst into figurative flames as page visits skyrocket? Consider employing a technique called load balancing.

What Exactly is Load Balancing?! 🤔⚖⏱

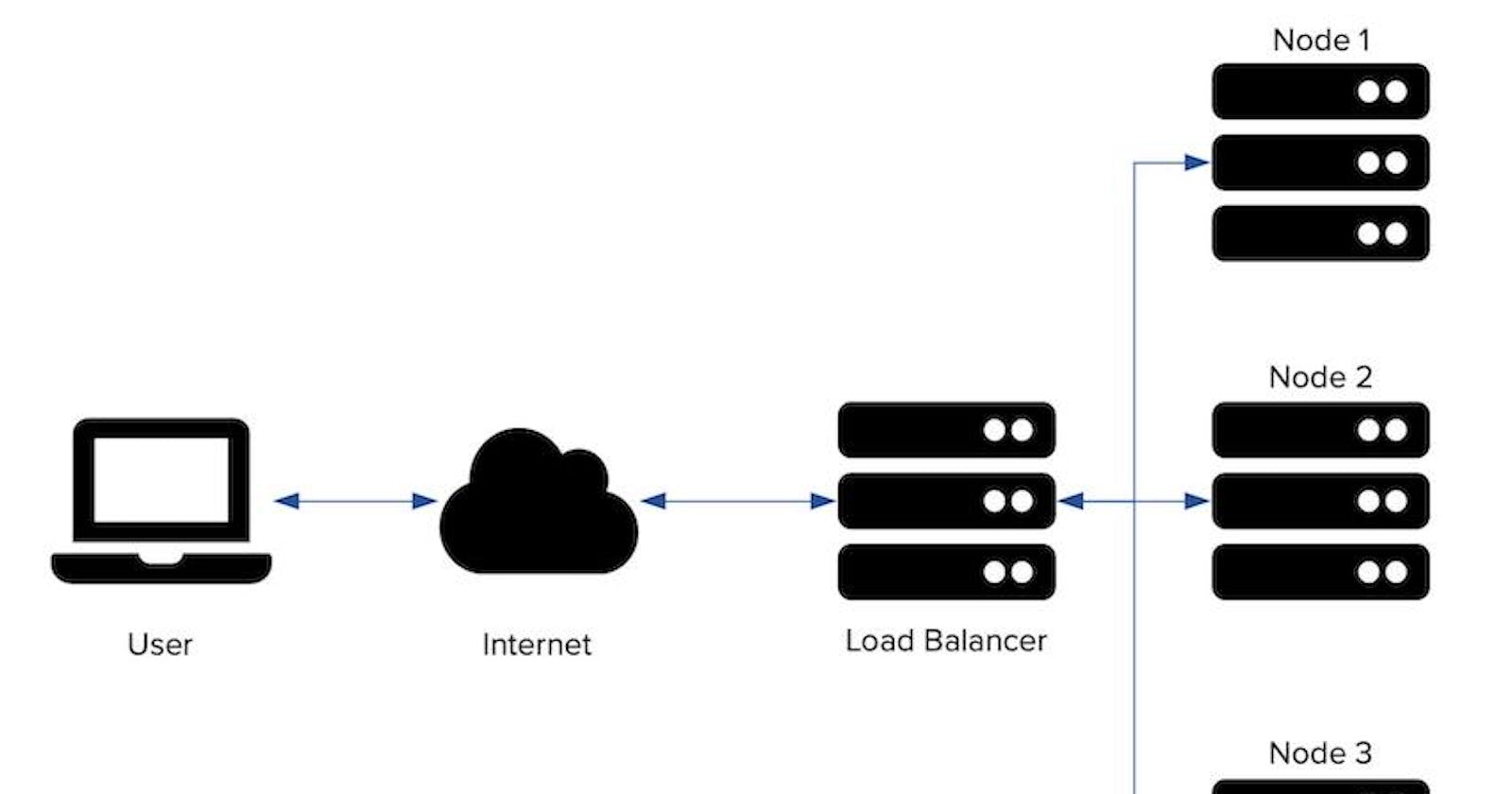

Load balancing refers to efficiently distributing incoming network traffic across a group of backend servers, also known as a server farm or server pool. The technique aims to reduce response time, increase throughput, and in general speed things up for each end user. The role of a load balancer is sometimes likened to that of a traffic cop: it systematically routes requests to the right locations at any given moment, thereby preventing costly bottlenecks and unforeseen incidents. Load balancers should ultimately deliver the performance and security necessary for sustaining complex IT environments, as well as the intricate workflows occurring within them.

Load Balancing Algorithms📝

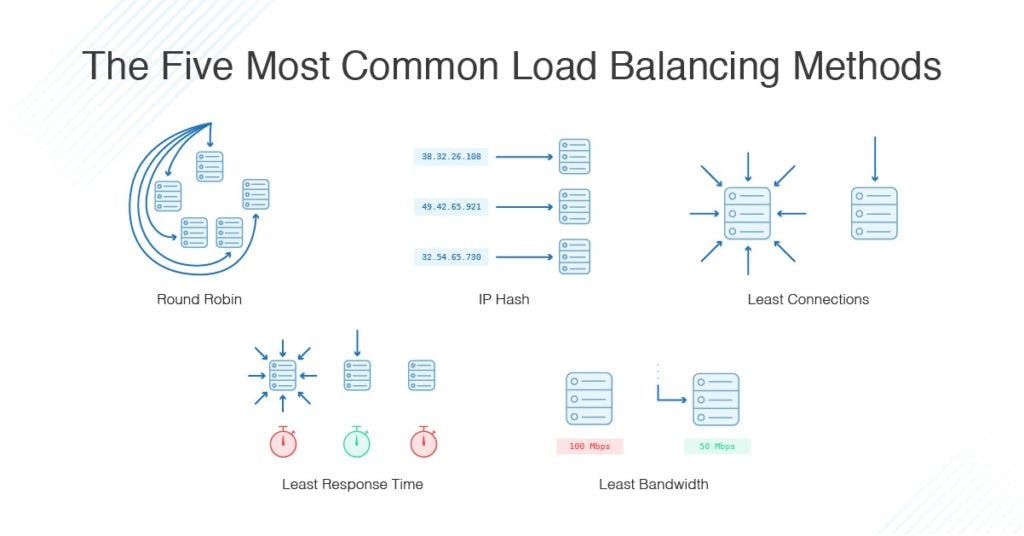

Different load balancing algorithms provide different benefits; the choice of load balancing method depends on your needs:

Round Robin - Round robin load balancing is a simple way to distribute client requests across a group of servers. Each client request is forwarded to the next server in the list in turn. When the load balancer reaches the end of the list, it goes back to the top and repeats the cycle.
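The rotation can be sketched in a few lines of Python. This is only an illustration: the server names and the `next_server` helper are made up for the example, not taken from any particular load balancer.

```python
from itertools import cycle

# Hypothetical backend pool; the names are placeholders for this example.
servers = ["app-1", "app-2", "app-3"]

# cycle() yields the servers in order and restarts from the top of the list forever.
rotation = cycle(servers)

def next_server():
    """Return the backend that should receive the next request."""
    return next(rotation)

# Five requests in a row wrap around after the third server.
picks = [next_server() for _ in range(5)]
print(picks)  # ['app-1', 'app-2', 'app-3', 'app-1', 'app-2']
```

Note that every server gets the same share of requests here, regardless of how busy it is.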

Least Connections - Least connections load balancing is a dynamic load balancing algorithm where each client request is sent to the application server with the fewest active connections at the time the request is received.
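A minimal sketch of the idea, assuming the load balancer keeps a live count of open connections per server (the `active` dictionary and both function names are hypothetical):

```python
# Hypothetical snapshot of open connections per backend.
active = {"app-1": 12, "app-2": 4, "app-3": 9}

def pick_least_connections(connections):
    """Return the backend with the fewest active connections."""
    return min(connections, key=connections.get)

def handle_request(connections):
    """Pick a backend and count the new connection against it."""
    server = pick_least_connections(connections)
    connections[server] += 1
    return server

print(handle_request(active))
```

A real balancer would also decrement the count when a connection closes; that part is left out to keep the sketch short.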

IP Hash - The IP Hash policy uses an incoming request's source IP address as a hashing key to route non-sticky traffic to the same backend server. The load balancer routes requests from the same client to the same backend server as long as that server is available.
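One way to picture this is to hash the client address and take the result modulo the number of servers. The sketch below assumes SHA-256 as the hash and a fixed server list; both are choices made for the example, and actual products may hash differently.

```python
import hashlib

def pick_by_ip_hash(client_ip, servers):
    """Map a client IP to a backend; the same IP always maps to the same backend."""
    digest = hashlib.sha256(client_ip.encode("utf-8")).digest()
    # Use the first 8 bytes of the digest as an integer, then wrap to the list size.
    index = int.from_bytes(digest[:8], "big") % len(servers)
    return servers[index]

# Hypothetical backend pool and client address.
servers = ["app-1", "app-2", "app-3"]
print(pick_by_ip_hash("203.0.113.7", servers))
```

With plain modulo hashing, adding or removing a server remaps many clients to different backends. Consistent hashing is a common refinement that limits that churn.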

Least Response Time - This method directs traffic to the server with the fewest active connections and the lowest average response time.
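Since two signals are involved, they have to be combined somehow. The sketch below multiplies them into a single score, which is an assumption made for illustration; the `ServerStats` type, its fields, and the `+ 1` term are all hypothetical.

```python
from dataclasses import dataclass

@dataclass
class ServerStats:
    name: str
    active_connections: int
    avg_response_ms: float

def pick_least_response_time(stats):
    """Return the server with the lowest (connections + 1) * average response time.

    The + 1 keeps an idle but slow server from scoring zero (an assumption of this sketch).
    """
    return min(stats, key=lambda s: (s.active_connections + 1) * s.avg_response_ms)

# Hypothetical measurements.
stats = [
    ServerStats("app-1", 10, 20.0),  # score 220
    ServerStats("app-2", 2, 50.0),   # score 150
    ServerStats("app-3", 5, 30.0),   # score 180
]
print(pick_least_response_time(stats).name)  # app-2
```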

Least Bandwidth - The load balancer selects the backend server based on bandwidth consumption over the last fourteen seconds: the server that has consumed the least bandwidth is chosen. Similarly, with the least packets method, the server that has transmitted the fewest packets receives new requests from the load balancer.
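The windowed measurement can be sketched as a sliding window of traffic samples per server. Everything here (the `BandwidthTracker` class, its methods, and the use of plain numeric timestamps) is hypothetical and only meant to show the bookkeeping.

```python
from collections import deque

WINDOW_SECONDS = 14  # the measurement window described above

class BandwidthTracker:
    def __init__(self, servers):
        # One queue of (timestamp, bytes) samples per backend.
        self.samples = {name: deque() for name in servers}

    def record(self, server, timestamp, num_bytes):
        """Note that `server` transferred `num_bytes` at `timestamp` (in seconds)."""
        self.samples[server].append((timestamp, num_bytes))

    def usage(self, server, now):
        """Total bytes for `server` inside the window ending at `now`."""
        window = self.samples[server]
        # Drop samples that have fallen out of the window.
        while window and window[0][0] <= now - WINDOW_SECONDS:
            window.popleft()
        return sum(num_bytes for _, num_bytes in window)

    def pick(self, now):
        """Return the backend that used the least bandwidth in the window."""
        return min(self.samples, key=lambda name: self.usage(name, now))
```

The least packets variant would look the same, counting packets instead of bytes.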

What are the types of load balancing?

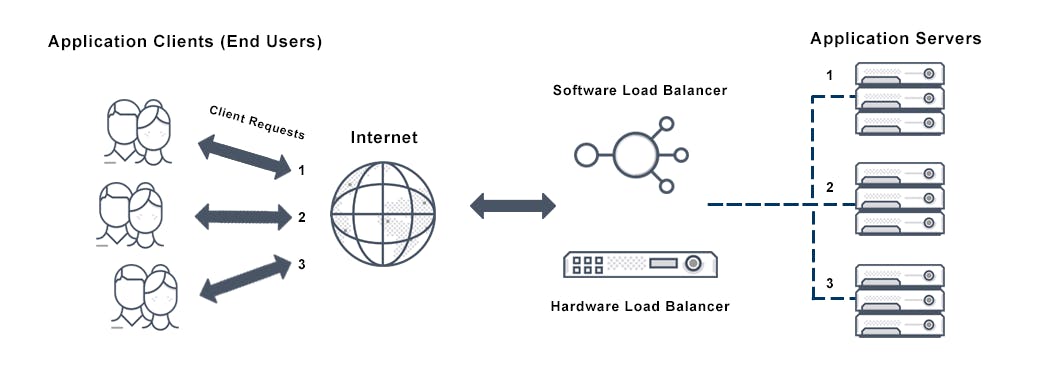

Software-based load balancers may be installed directly onto a server, or they may be purchased as load balancer as a service (LBaaS). With LBaaS, the service provider is responsible for installing, configuring, and managing the load balancing software. The software-based load balancer may be located on-premises or off.

Like servers, load balancing appliances can be physical or virtual. Physical (hardware load balancing) and virtual (software load balancing) appliances both evaluate client requests and server usage in real time and send requests to different servers based on a variety of algorithms. Where the traffic is sent depends on the load balancing policy set by the administrator.

Advantages of Using Load Balancers💪👍

Naming abstraction: the client addresses the load balancer through a predefined mechanism, and name resolution can then be delegated to the load balancer. Such mechanisms include built-in libraries and well-known DNS/IP/port locations.

Fault tolerance: through health checking and various algorithmic techniques, the load balancer can route around a bad or overloaded backend. This means that an operator can take the time needed to fix the problem instead of treating it as an emergency.
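As a rough illustration of health checking, the sketch below marks a backend unhealthy after a number of consecutive failed checks and brings it back after one success. The `HealthTracker` class and the threshold of three failures are assumptions for the example, not a description of any specific product.

```python
class HealthTracker:
    def __init__(self, backends, max_failures=3):
        # Consecutive failed checks per backend.
        self.failures = {backend: 0 for backend in backends}
        self.max_failures = max_failures

    def report(self, backend, ok):
        """Record one health-check result; a success resets the failure count."""
        self.failures[backend] = 0 if ok else self.failures[backend] + 1

    def healthy(self):
        """Backends that are still eligible to receive traffic."""
        return [b for b, n in self.failures.items() if n < self.max_failures]

tracker = HealthTracker(["app-1", "app-2"], max_failures=2)
tracker.report("app-1", ok=False)
tracker.report("app-1", ok=False)
print(tracker.healthy())  # ['app-2']
```

Any of the selection algorithms above could then be applied to the list returned by `healthy()` rather than to the full pool.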

Cost and performance benefits: distributed system networks are rarely homogeneous. The system likely spans different zones and regions, and oversubscription between zones is normal. Intelligent load balancing can keep request traffic within a zone as much as possible, which improves performance (less latency) and reduces the cost of the whole system (less bandwidth and fiber needed between zones).
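A simple way to express "stay in the zone when possible" is to prefer healthy backends in the client's zone and only fall back to other zones when none exist. The backend records and the zone names below are made up for the example.

```python
def zone_aware_candidates(client_zone, backends):
    """Prefer healthy backends in the client's zone; otherwise use any healthy backend."""
    healthy = [b for b in backends if b["healthy"]]
    local = [b for b in healthy if b["zone"] == client_zone]
    return local if local else healthy

# Hypothetical pool spread over two zones.
backends = [
    {"name": "app-1", "zone": "zone-a", "healthy": True},
    {"name": "app-2", "zone": "zone-a", "healthy": False},
    {"name": "app-3", "zone": "zone-b", "healthy": True},
]
print([b["name"] for b in zone_aware_candidates("zone-a", backends)])  # ['app-1']
```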

Disadvantages of Using Load Balancers👎😕

- Difficult to set up for network administrators who are new to sticky sessions.

- Problems can be difficult to diagnose.

- The load-balancer/router must be load-balanced itself, or it becomes a point of failure that will take down an entire cluster.

- Cannot provide global load-balancing (whereas round-robin DNS can).

- Session failover is often not implemented, as there is reduced need. If a server goes offline, all users on that server lose their sessions.

💥✨The best load balancers can handle session persistence as needed. Another use case for session persistence is when an upstream server caches information requested by a user to boost performance. Switching servers would cause that information to be fetched a second time, creating performance inefficiencies.
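Session persistence can be pictured as a lookup table from session to server. In the sketch below, a new session is assigned by round robin and every later request with the same session ID goes back to the same server; the `StickyBalancer` class and the session IDs are hypothetical.

```python
from itertools import cycle

class StickyBalancer:
    def __init__(self, servers):
        self._rotation = cycle(servers)   # used only for brand-new sessions
        self._assignments = {}            # session ID -> assigned server

    def route(self, session_id):
        """Return the server for this session, assigning one on first sight."""
        if session_id not in self._assignments:
            self._assignments[session_id] = next(self._rotation)
        return self._assignments[session_id]

lb = StickyBalancer(["app-1", "app-2"])
print(lb.route("session-42"))  # app-1
print(lb.route("session-77"))  # app-2
print(lb.route("session-42"))  # app-1 again, so its cached data is reused
```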